VocalTractLab

The state-of-the-art articulatory synthesiser VocalTractLab (version 2.3) is available at vocaltractlab.de

You may also be interested in these source repositories, which separate the graphical components from the rest of the code and add support for GNU/Linux: API, GUI.

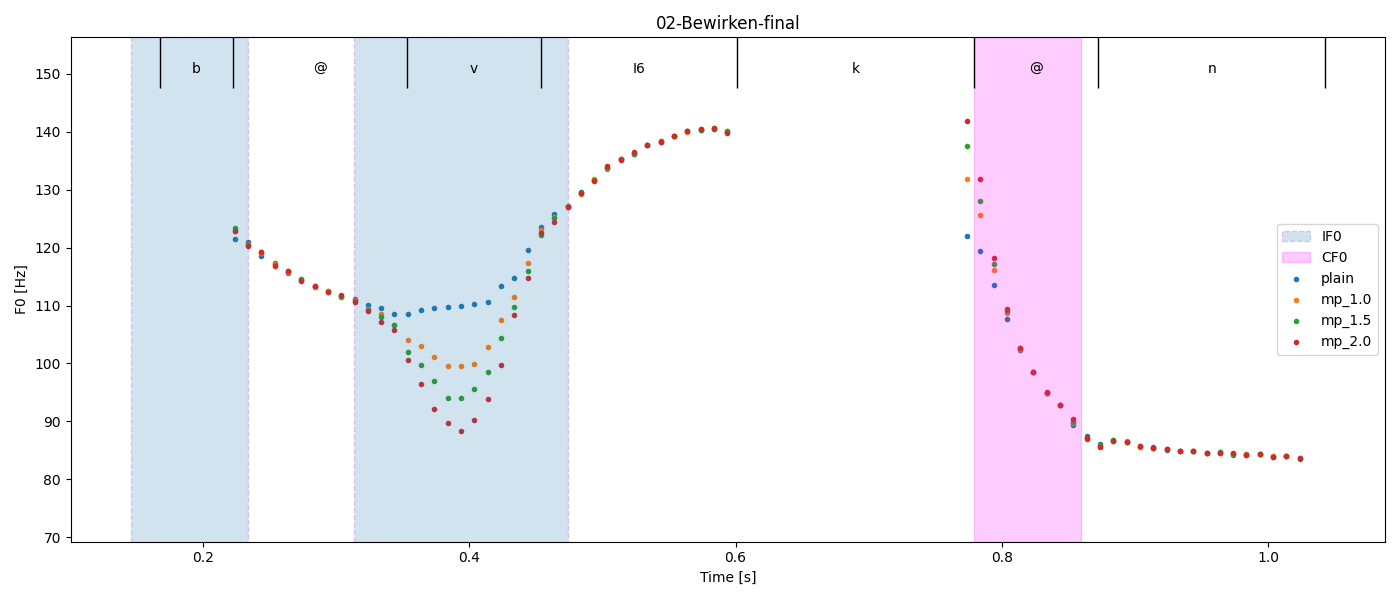

PyVTL

A set of Python bindings for VocalTractLab makes it easy to set up experiments using NumPy, Pandas, SciPy and Praat, with Matplotlib for visualisation.

Find the code and experimental setup for an analysis of microprosody here: github.com/TUD-STKS/Microprosody

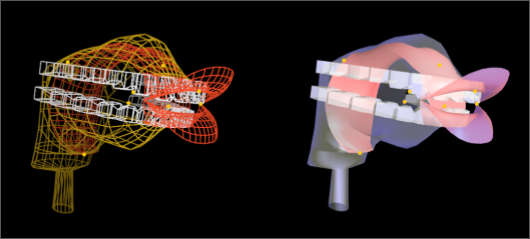

Evoclearn

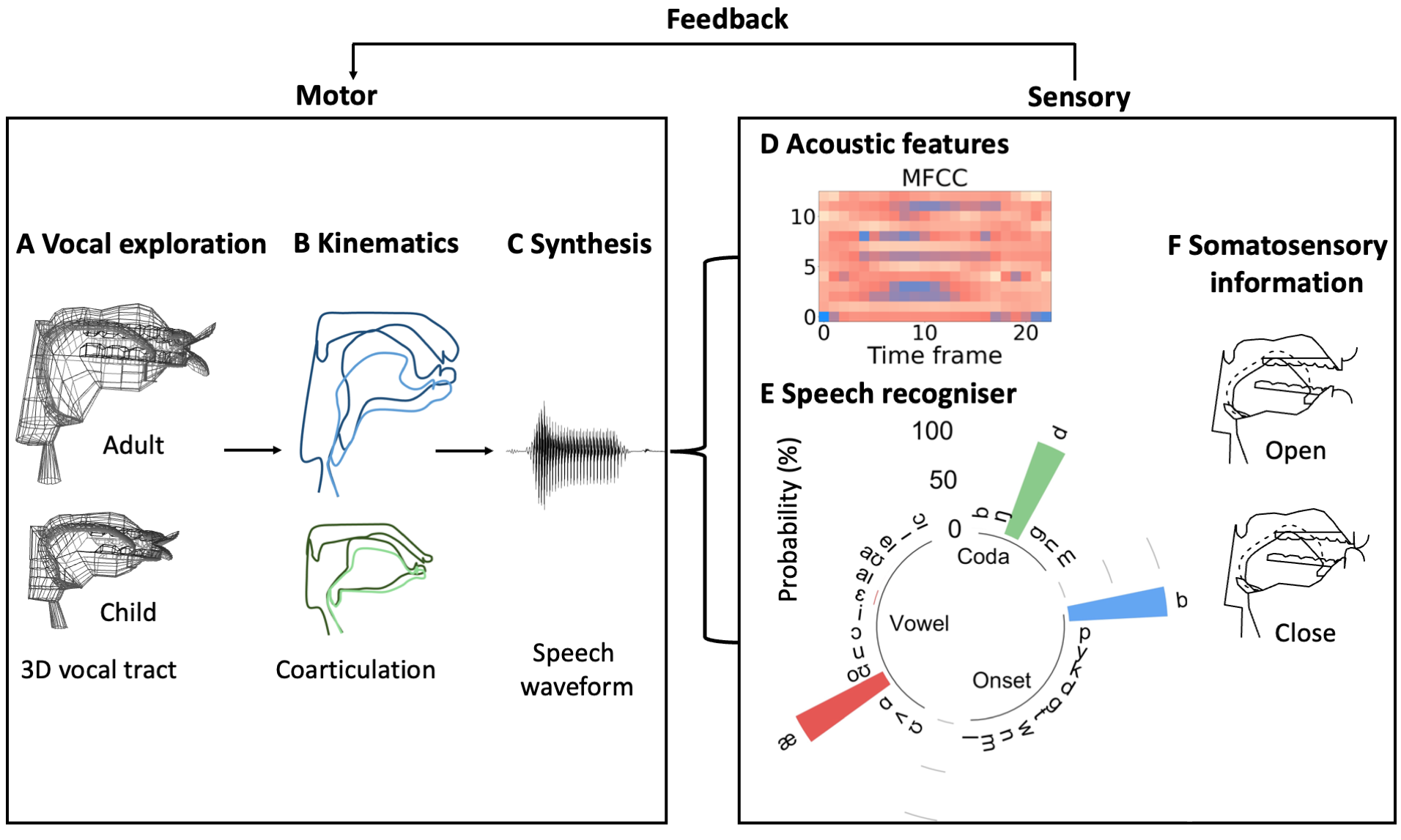

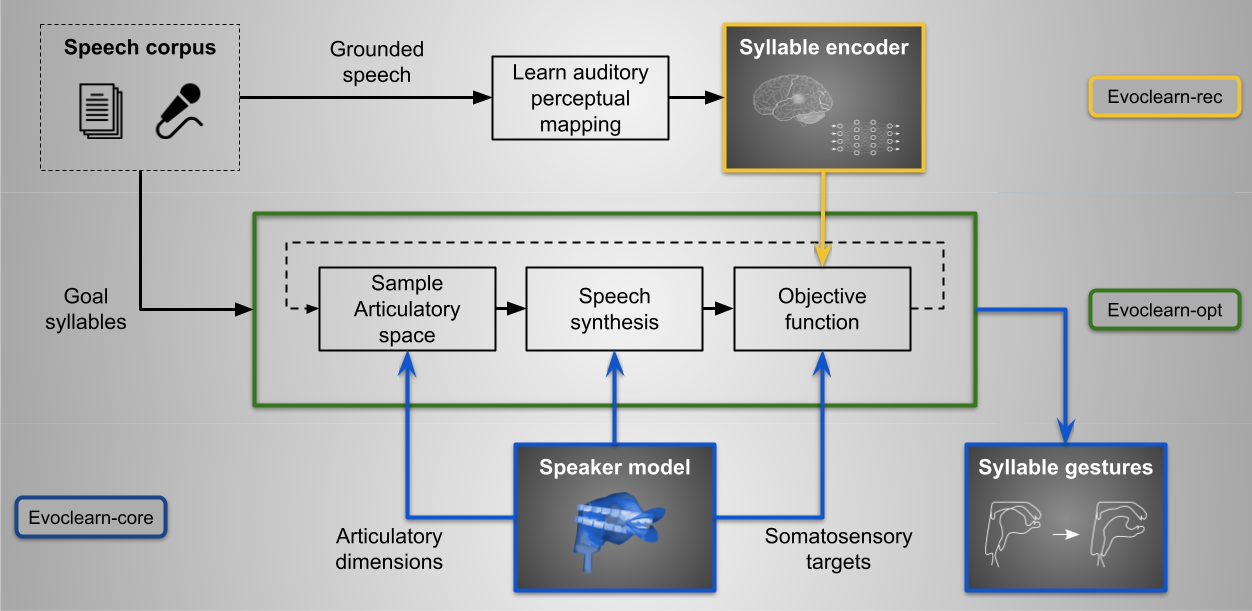

- The simulation of vocal learning with vocal tracts of different sizes shown above is presented here: gitlab.com/Anqi_Xu/evoc_learn

- Vocal exploration is implemented as an optimisation process, as illustrated above, by the following Python packages: evoclearn-core, evoclearn-rec, evoclearn-opt

- These packages can be installed from the Python Package Index, and a complete example is available as a Python notebook: Quickstart Guide
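To make the idea of vocal exploration as optimisation concrete, here is a minimal, self-contained sketch. It does not use the evoclearn packages or VocalTractLab. `toy_synthesise` is a hypothetical two-parameter stand-in for an articulatory synthesiser with made-up numbers, and the search is a simple (1+1) evolution strategy chosen for brevity. It is not necessarily the optimiser that evoclearn-opt uses. The loop perturbs the articulatory parameters and keeps a candidate only if its acoustic output moves closer to a target.

```python
import math
import random


def toy_synthesise(params):
    """Hypothetical stand-in for an articulatory synthesiser (NOT VocalTractLab).

    Maps two articulatory parameters in [0, 1] to two formant-like values in Hz
    using a made-up linear rule.
    """
    tongue_height, tongue_front = params
    f1 = 800.0 - 500.0 * tongue_height   # a higher tongue lowers F1
    f2 = 900.0 + 1400.0 * tongue_front   # a fronter tongue raises F2
    return (f1, f2)


def explore(target, steps=2000, step_size=0.05, seed=0):
    """Vocal exploration as optimisation via a (1+1) evolution strategy.

    Each step perturbs the current parameters with Gaussian noise, clips them
    to [0, 1], and keeps the candidate only if its synthesised output is
    closer (Euclidean distance) to the target.
    """
    rng = random.Random(seed)
    params = [0.5, 0.5]
    best_err = math.dist(toy_synthesise(params), target)
    for _ in range(steps):
        candidate = [min(1.0, max(0.0, p + rng.gauss(0.0, step_size))) for p in params]
        err = math.dist(toy_synthesise(candidate), target)
        if err < best_err:
            params, best_err = candidate, err
    return params, best_err


if __name__ == "__main__":
    # Illustrative target: low F1 and high F2, roughly like a close front vowel.
    params, err = explore(target=(300.0, 2200.0))
    print(f"params={params}, distance={err:.1f} Hz")
```

In a real setup, the toy mapping would be replaced by a synthesiser and the distance by a more meaningful objective. For the actual interfaces, refer to the packages' Quickstart Guide.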